How is the website built? It is vital to know this early in the process, because it often dictates how easy or difficult the scrape will be, and it helps you plan what types of functions the scrape will need. Almost all pages on the internet use HTML and CSS, and there are good frameworks in Python that handle these easily. How heavily is JavaScript used? It is important to know whether JavaScript is being used to manipulate the website and load new information, which may or may not be directly accessible.

Is there dynamically generated content? By this we mean: is there enough at a quick glance to tell that the website's functionality is interactive and most likely generated by JavaScript? The more interactive the website, the more challenging the scrape. Infinite scrolling is a good example. It is a JavaScript-oriented feature: new HTTP requests are made to a server, and based on these requests, whether generic or very specific, either the DOM is manipulated or data from the server is made available and displayed on the page. This is important to understand because we often either need to simulate this behaviour ourselves or use browser activity to simulate it for us.

Logging in presents a specific challenge in web scraping. If a login is required, it slows down the scrape and also makes it far easier for your scraper to be blocked.
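One quick way to answer "how heavily is JavaScript used?" is to compare what you see in the browser with the HTML the server returns before any JavaScript runs. The sketch below uses only Python's standard library. `fetch_raw_html` and `looks_javascript_rendered` are hypothetical helper names, and the check is a rough heuristic rather than a definitive test: text may be HTML-escaped in the source, or embedded as JSON inside a `<script>` tag.

```python
import urllib.request


def fetch_raw_html(url: str, timeout: float = 10.0) -> str:
    """Download the page exactly as the server sends it (no JavaScript is run)."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Fall back to UTF-8 if the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def looks_javascript_rendered(raw_html: str, visible_text: str) -> bool:
    """Heuristic: True if text visible in the browser is absent from the raw HTML.

    Missing text was most likely added later by JavaScript (for example via a
    fetch/XHR request), so parsing the raw HTML alone will not find it.
    """
    return visible_text.lower() not in raw_html.lower()
```

For example, if a product name you can see in Chrome is missing from `fetch_raw_html("https://example.com/products")` (a placeholder URL), the content is probably loaded by JavaScript and worth tracking down in the Network tab of Chrome DevTools.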

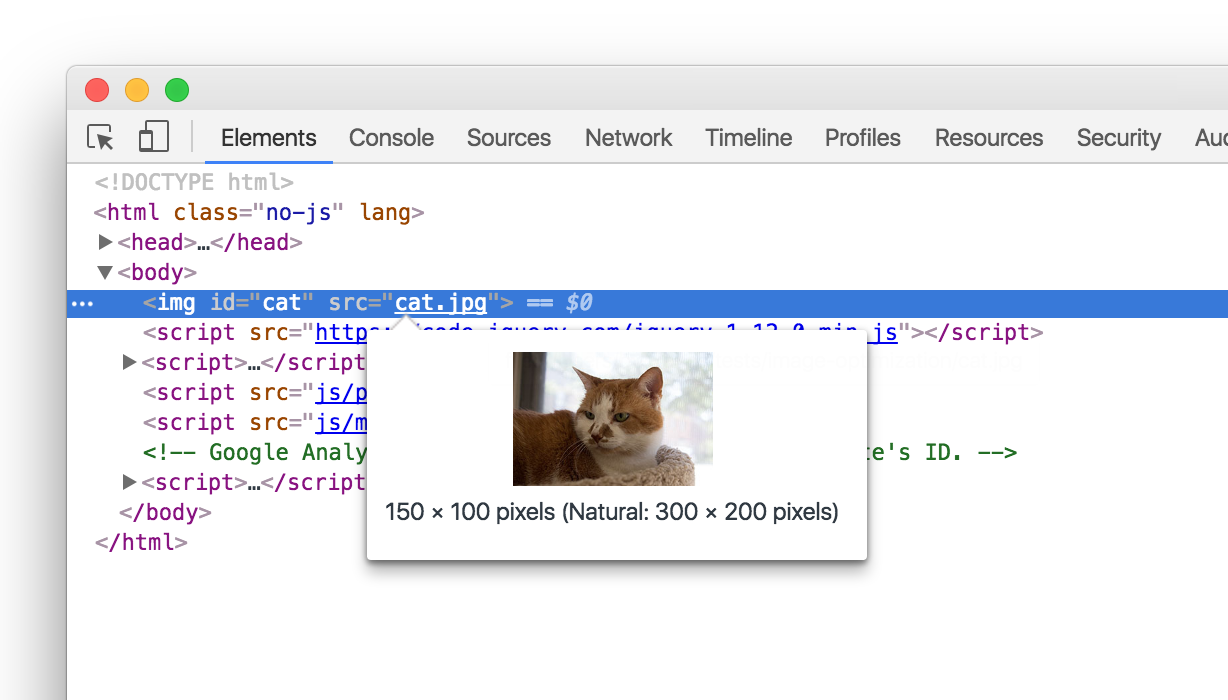
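With infinite scrolling, the extra content usually arrives through requests you can see in the Network tab of Chrome DevTools (use the Fetch/XHR filter). These are often GET requests that differ only by a page or offset parameter. Replaying those requests directly is one way to simulate the scrolling behaviour. The sketch below assumes a hypothetical JSON endpoint that accepts a `page` query parameter and returns its results under an `items` key. The real parameter and key names depend on what the inspector shows for your site.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Iterator


def build_page_url(base_url: str, page: int, page_param: str = "page") -> str:
    """Add (or overwrite) the paging parameter in the URL's query string."""
    parts = urllib.parse.urlsplit(base_url)
    # Note: dict() keeps only the last value of any repeated query parameter.
    query = dict(urllib.parse.parse_qsl(parts.query))
    query[page_param] = str(page)
    return urllib.parse.urlunsplit(parts._replace(query=urllib.parse.urlencode(query)))


def fetch_json(url: str) -> dict:
    """Perform the GET request seen in the Network tab and decode its JSON body."""
    request = urllib.request.Request(
        url, headers={"Accept": "application/json", "User-Agent": "Mozilla/5.0"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def iter_items(
    base_url: str,
    items_key: str = "items",  # assumed key; check the real response in DevTools
    max_pages: int = 50,  # safety limit so a misbehaving endpoint cannot loop forever
    fetch: Callable[[str], dict] = fetch_json,
) -> Iterator[dict]:
    """Replay the 'load more' request page by page until a page comes back empty."""
    for page in range(1, max_pages + 1):
        data = fetch(build_page_url(base_url, page))
        items = data.get(items_key, [])
        if not items:
            break
        yield from items
```

Passing `fetch` as a parameter keeps the paging logic separate from the network call. You can test the logic with canned responses, or swap in a request function that carries login cookies.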


Understanding the workflow of web scraping

There are three key areas to consider when doing web scraping. One of them is planning the data you require and their selectors/attributes on the page. In this article, we will focus on inspecting the website. This is the first and most important part of web scraping, yet it is also the least talked about, which is why you're here reading this!

When you first think of a website to extract data from, you will have some idea of the data you want. Is the data on one page, on several pages, or reached through multiple click-throughs of pages? Information on a single page is the easiest to collect, and the code will inevitably be simpler. Nested pages of information require many more HTTP requests, and the code will be more complex as a result.
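Once you know where the data lives, it helps to write down the selectors/attributes for each field before writing the full scraper. This sketch records such a plan as a dictionary. It maps hypothetical field names to a (tag, class) pair you might note while inspecting the page, then extracts the text of the first matching element using the standard library's `html.parser`. If BeautifulSoup is installed, `soup.select_one()` with a CSS selector does the same job more concisely. The standard library is used here only to keep the sketch self-contained.

```python
from html.parser import HTMLParser

# Hypothetical plan: field name -> (tag, class) noted while inspecting the page.
FIELD_PLAN = {
    "title": ("h1", "product-title"),
    "price": ("span", "price"),
}


class FieldExtractor(HTMLParser):
    """Collect the text of the first element matching each (tag, class) in a plan."""

    def __init__(self, plan):
        super().__init__()
        self.plan = plan
        self.results = {}
        self._active = None  # (field, tag, nesting depth) currently being captured
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if self._active:
            field, active_tag, depth = self._active
            # Track nested elements with the same tag so we close on the right one.
            if tag == active_tag:
                self._active = (field, active_tag, depth + 1)
            return
        classes = (dict(attrs).get("class") or "").split()
        for field, (want_tag, want_class) in self.plan.items():
            if field not in self.results and tag == want_tag and want_class in classes:
                self._active = (field, tag, 1)
                self._buffer = []
                break

    def handle_endtag(self, tag):
        if not self._active:
            return
        field, active_tag, depth = self._active
        if tag != active_tag:
            return
        if depth > 1:
            self._active = (field, active_tag, depth - 1)
        else:
            # Collapse whitespace so "Blue\n  Widget" becomes "Blue Widget".
            self.results[field] = " ".join("".join(self._buffer).split())
            self._active = None

    def handle_data(self, data):
        if self._active:
            self._buffer.append(data)


def extract_fields(html, plan):
    """Return {field: text} for every planned field found in the HTML."""
    parser = FieldExtractor(plan)
    parser.feed(html)
    parser.close()
    return parser.results
```

Fields missing from the page are simply absent from the result, which makes it easy to spot selectors that need revisiting.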


How to use Chrome DevTools to your advantage

So you have a website you want to scrape, but don't necessarily know what package to use or how to go about the process? This is common when first starting out with web scraping. Learning to get what you want from a website efficiently takes time and multiple scripts. In this article, we will go through the process of planning a web scraping script, with three aims:

- To understand the workflow of web scraping.
- To quickly analyse a website for data extraction.
- To leverage Chrome DevTools for web scraping.
